Current Project

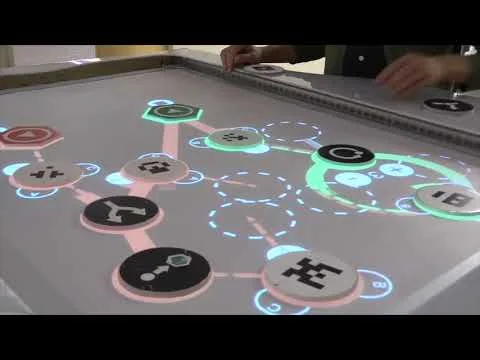

As AI becomes increasingly embedded in everyday life, understanding its underlying principles is crucial, yet educational resources for non-experts remain scarce. To address this, we developed three interactive web-based tools that demystify core AI concepts (edge detection, confidence thresholds, and sensitivity) through hands-on experiences.

Each tool was designed using user-centered and learning sciences principles, allowing users to interact directly with complex AI processes. For example, “The Art of Edge Detection” visually explains how AI recognizes boundaries in images, while the “Confidence Calibration Explorer” and “Toggling Sensitivity” tools introduce users to reliability in AI decision-making and to algorithmic trade-offs. Users adjust parameters and see the results in real time, which helps deepen their understanding of AI’s strengths, limitations, and ethical implications.
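To make the trade-off behind confidence thresholds and sensitivity concrete, here is a minimal Python sketch. It is not the code behind the tools: the function name `sensitivity_at_threshold` and the scores and labels are hypothetical, chosen only to show how moving a threshold changes what a classifier catches and what it flags by mistake.

```python
def sensitivity_at_threshold(scores, labels, threshold):
    """Return (sensitivity, false_positives) when every score >= threshold
    is treated as a positive prediction.

    Illustrative only: names and data are assumptions, not taken from the tools.
    """
    predicted = [s >= threshold for s in scores]
    true_pos = sum(1 for p, y in zip(predicted, labels) if p and y)
    false_pos = sum(1 for p, y in zip(predicted, labels) if p and not y)
    actual_pos = sum(1 for y in labels if y)
    # Sensitivity is the share of real positives that the model catches.
    sensitivity = true_pos / actual_pos if actual_pos else 0.0
    return sensitivity, false_pos


# Made-up model confidence scores and the true labels for six examples.
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

for threshold in (0.9, 0.5, 0.35):
    sens, fp = sensitivity_at_threshold(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  sensitivity={sens:.2f}  false positives={fp}")
```

In this toy data, lowering the threshold catches more of the true positives but also lets a false positive through, which is the kind of trade-off the tools let users explore by adjusting parameters.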

In a study involving 42 adult participants, we found that engagement with these tools increased participants’ familiarity with AI concepts and their confidence in discussing them. A mixed-methods evaluation highlighted interaction patterns such as critical thinking, ethical consideration, and real-world application, showing how adult learners actively make sense of AI technologies.

This project not only contributes new educational tools to the AI and XAI communities but also provides valuable insights into designing effective, transparent, and learner-centered AI interfaces. The tools are accessible online, supporting a more inclusive AI literacy framework and encouraging ethical, informed AI engagement across diverse user backgrounds.

Publications

Designing Interactive Explainable AI Tools for Algorithmic Literacy and Transparency (DIS 2024)

Other Resources

AI Explainers

Collaborators: Maalvika Bhat